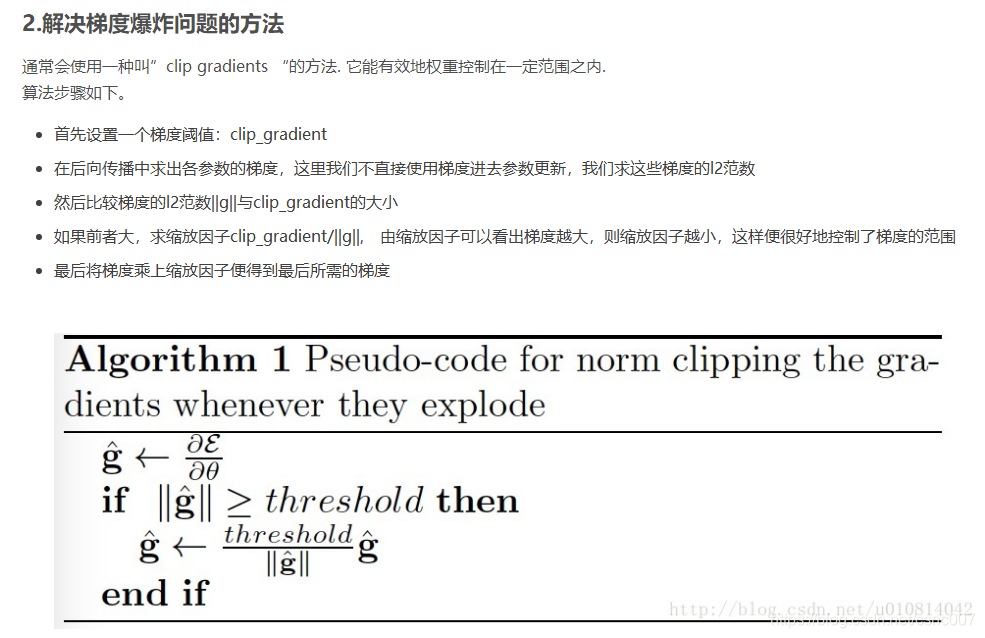

![\[FSDP\] FSDP produces different gradient norms vs DDP, and w/ grad norm clipping creates different training results · Issue #88621 · pytorch/pytorch · GitHub](https://user-images.githubusercontent.com/46302957/200437875-f282d44f-b62b-4a25-baf3-a06b2b2ce236.png)

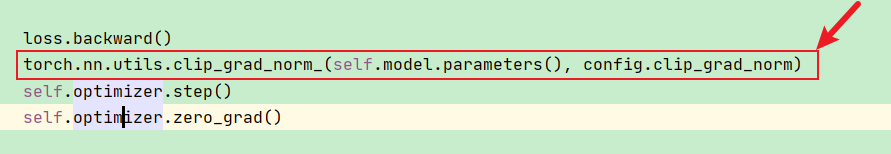

[FSDP] FSDP produces different gradient norms vs DDP, and w/ grad norm clipping creates different training results · Issue #88621 · pytorch/pytorch · GitHub

![\[PDF\] The Introspective Agent: Interdependence of Strategy, Physiology, and Sensing for Embodied Agents | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e7a5d14e1b338a86267ad8cc80172a6e9fb51603/11-Table2-1.png)

[PDF] The Introspective Agent: Interdependence of Strategy, Physiology, and Sensing for Embodied Agents | Semantic Scholar

Hyperparameters used for training. One sensitive parameter is ppo epoch... | Download Scientific Diagram

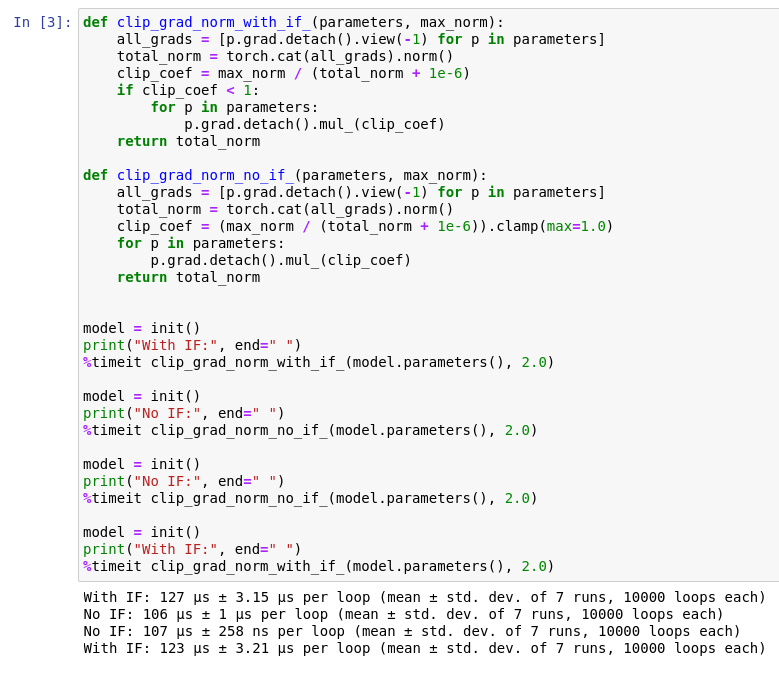

Slow clip_grad_norm_ because of .item() calls when run on device · Issue #31474 · pytorch/pytorch · GitHub

FutureWarning from clip_grad_norm_ when training model in Python · Issue #687 · ultralytics/ultralytics · GitHub

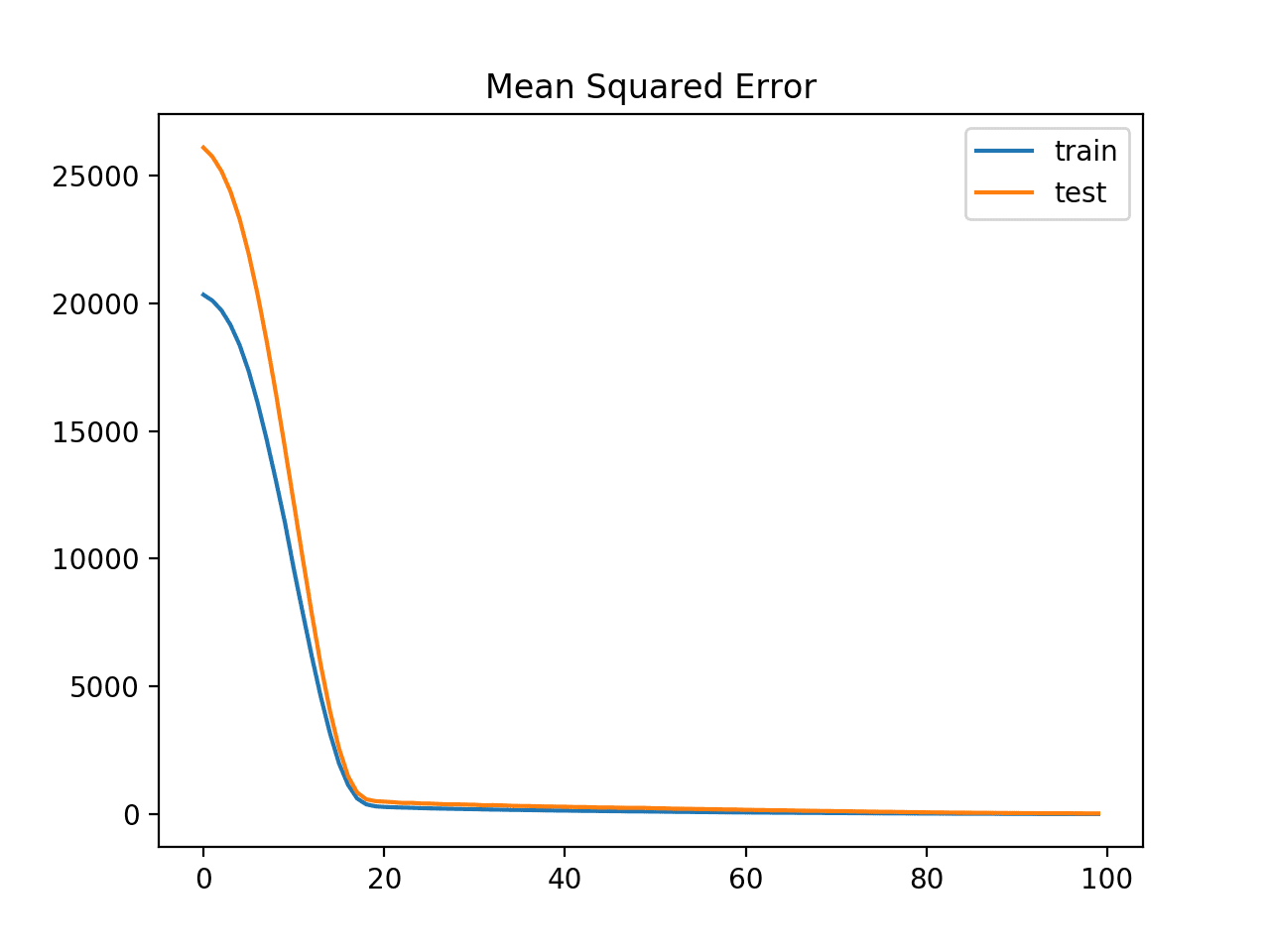

![\[FSDP\] FSDP produces different gradient norms vs DDP, and w/ grad norm clipping creates different training results · Issue #88621 · pytorch/pytorch · GitHub](https://user-images.githubusercontent.com/46302957/200437760-cb9df95e-0aa3-435a-a574-bed5bd4ff14e.png)