Colab IPython Interactive Demo Notebook: Natural Language Visual Search Of Television News Using OpenAI's CLIP – The GDELT Project

![[P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search](https://external-preview.redd.it/W9YcFgBnfZDMlabAtrfk4CNq8IjFz7gmrlOz2NkSIKs.png?format=pjpg&auto=webp&s=7617eef5cbad7a9c0399650933d416ae43c14740)

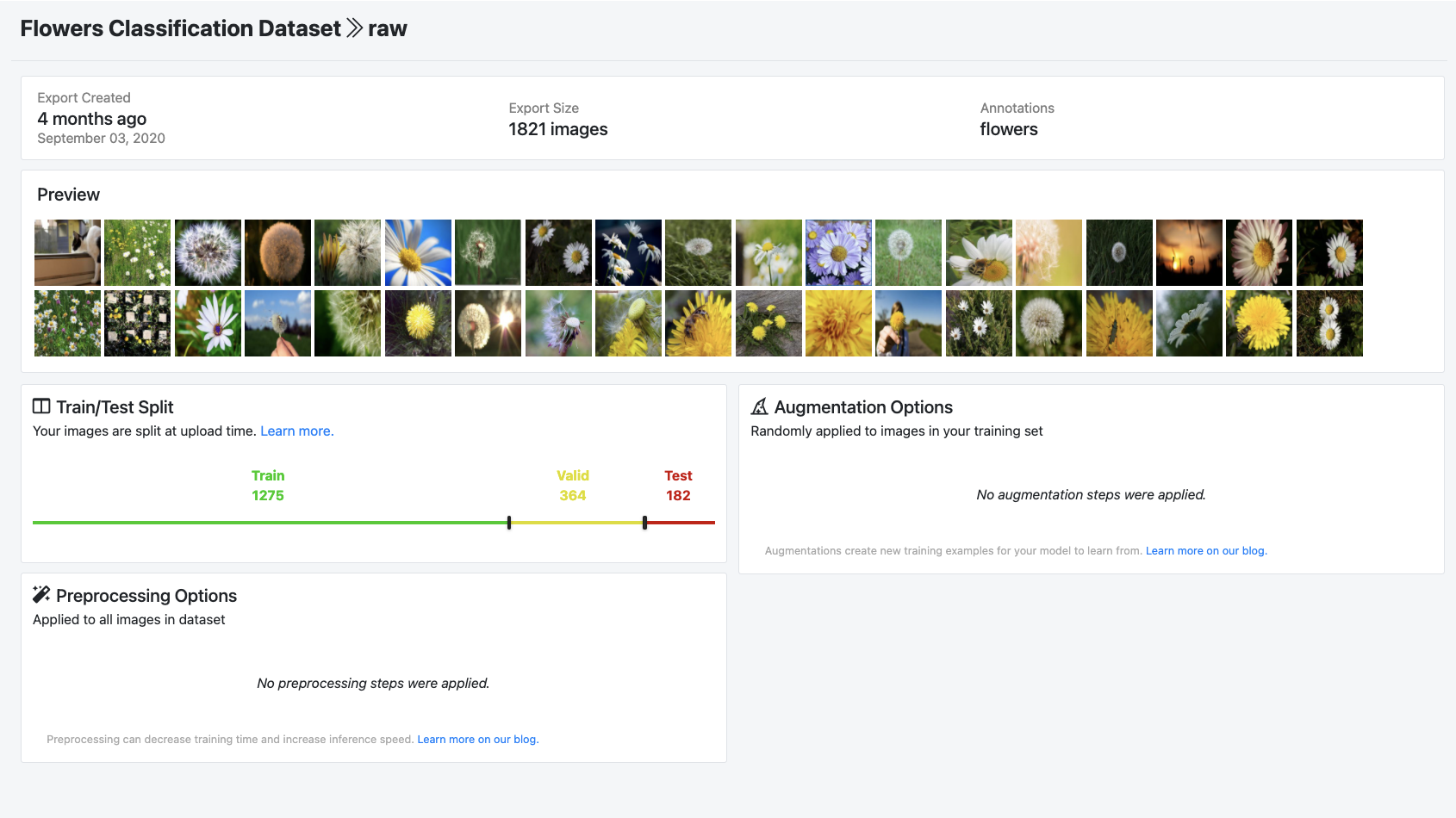

[P] I made an open-source demo of OpenAI's CLIP model running completely in the browser - no server involved. Compute embeddings for (and search within) a local directory of images, or search

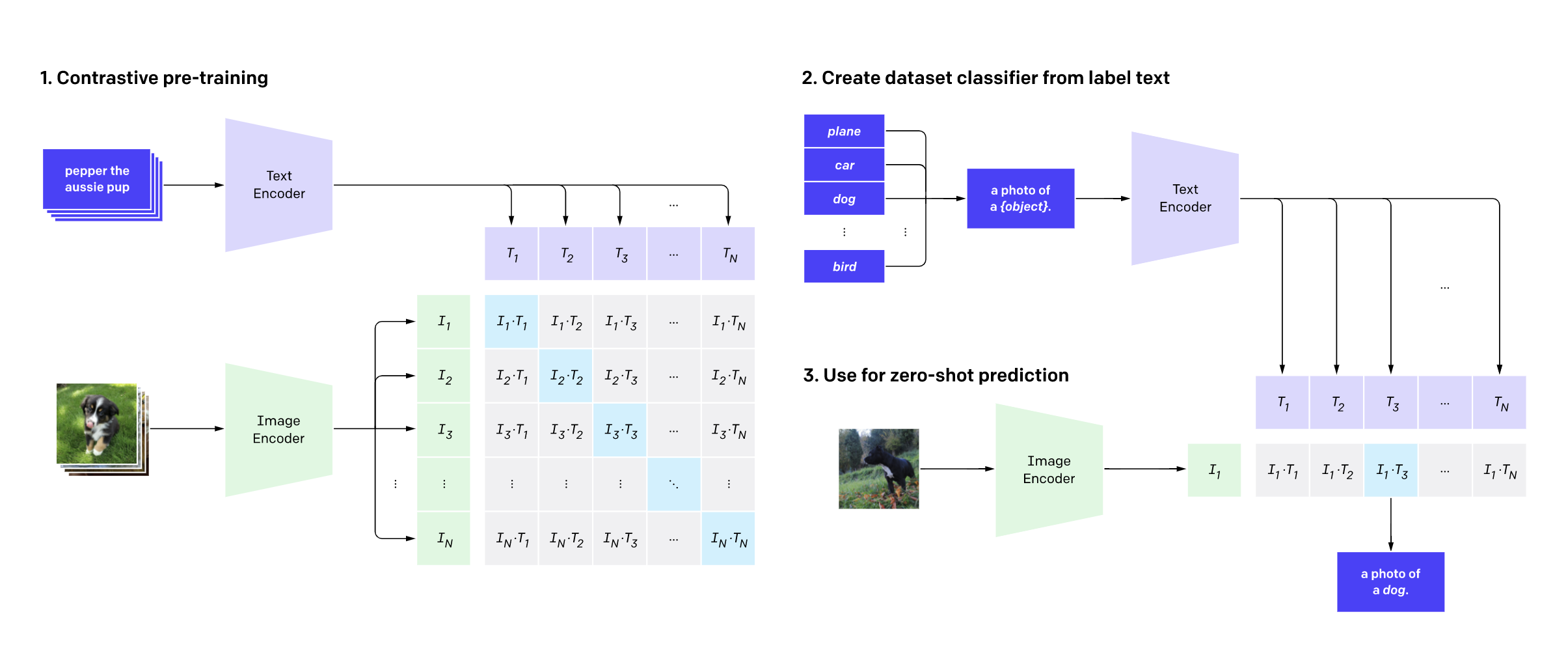

OpenAI and the road to text-guided image generation: DALL·E, CLIP, GLIDE, DALL·E 2 (unCLIP) | by Grigory Sapunov | Intento

Makeshift CLIP vision for GPT-4, image-to-language > GPT-4 prompting Shap-E vs. Shap-E image-to-3D - API - OpenAI Developer Forum


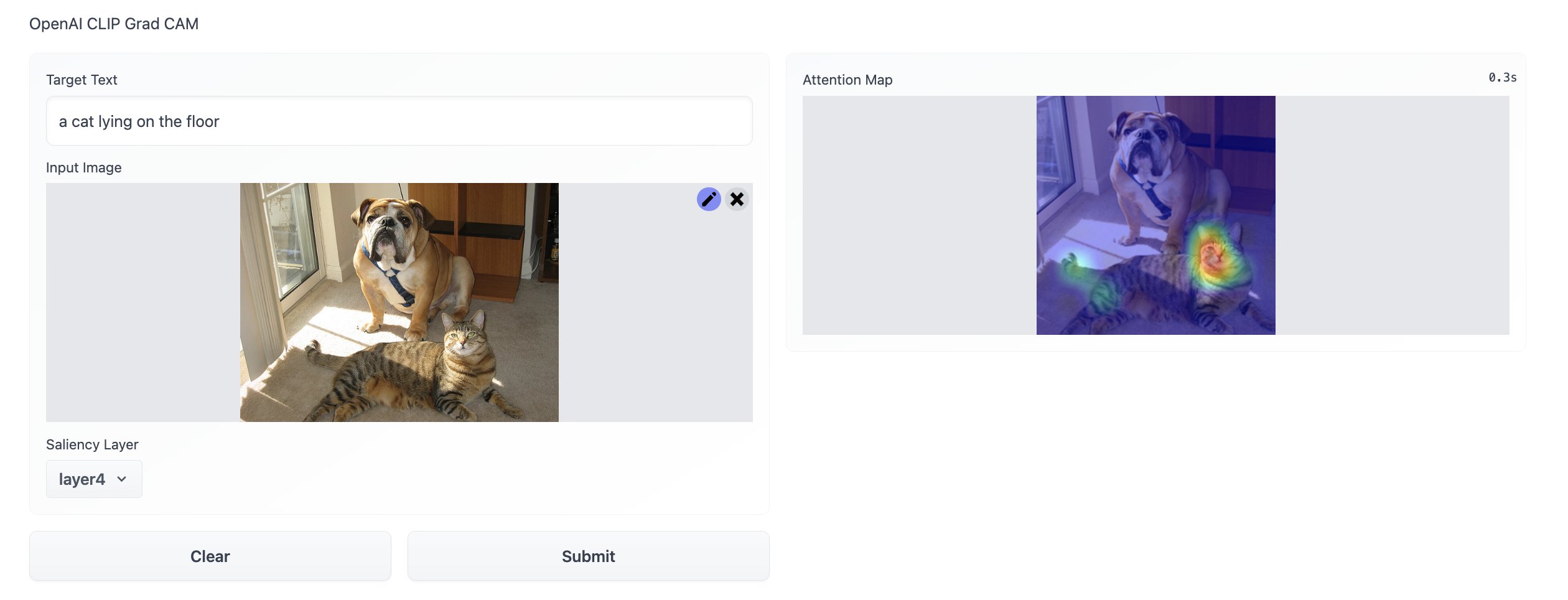

![[P] OpenAI CLIP: Connecting Text and Images Gradio web demo : r/MachineLearning](https://preview.redd.it/qstepjl4o6q61.jpg?vthumb=1&s=243f652b639e962e47959f166efabc3d083cf514)